When it comes to managing a WordPress site, there are several important files and configurations that website owners need to be aware of. One of them is the robots.txt file, which shapes how search engines interact with your site. The robots.txt file tells web crawlers which parts of your site they may crawl and which they should skip, making it a key tool for managing your site’s search engine optimization (SEO). This article explains how to access, modify, and use the robots.txt file in WordPress.

What Is Robots.txt and Why Is It Important?

Before delving into how to access the robots.txt file, it’s worth understanding what it is and why it matters. The robots.txt file is a plain text file that resides in the root directory of your website, so it is always served at yourdomain.com/robots.txt. Search engine crawlers such as Googlebot and Bingbot request it when they visit your site. Reputable crawlers follow its rules voluntarily; the file does not technically prevent anyone from accessing a page.

Search engines use the robots.txt file to determine which pages or sections of your website they should crawl and which ones they should skip. The file can also point crawlers to your important content through a sitemap reference. Note that robots.txt controls crawling, not indexing: a blocked URL can still appear in search results if other sites link to it, so use a noindex meta tag when a page must stay out of the index. Keeping crawlers away from low-value or duplicate URLs can also help you avoid duplicate content issues that may negatively impact your SEO.
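To make the crawler’s side of this concrete, here is a minimal Python sketch using the standard library’s `urllib.robotparser`. It shows that the robots.txt URL is always derived from the site root, whatever page is requested, and how a well-behaved crawler checks a URL before fetching it. The rules, the example.com URLs, and the helper names `robots_url_for` and `may_crawl` are hypothetical, chosen only for illustration; a real crawler would download the file instead of parsing a string.

```python
from urllib.parse import urljoin
from urllib.robotparser import RobotFileParser


def robots_url_for(page_url: str) -> str:
    """robots.txt always lives at the root of the host, whatever page is requested."""
    return urljoin(page_url, "/robots.txt")


def may_crawl(robots_text: str, user_agent: str, page_url: str) -> bool:
    """Return True if a well-behaved crawler may fetch page_url under these rules."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser.can_fetch(user_agent, page_url)


# Hypothetical rules: keep crawlers out of the WordPress admin area only.
ROBOTS_TXT = """User-agent: *
Disallow: /wp-admin/
"""

print(robots_url_for("https://www.example.com/blog/hello-world/"))
# https://www.example.com/robots.txt
print(may_crawl(ROBOTS_TXT, "Googlebot", "https://www.example.com/blog/hello-world/"))  # True
print(may_crawl(ROBOTS_TXT, "Googlebot", "https://www.example.com/wp-admin/options.php"))  # False
```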

How to Access Robots.txt in WordPress

Accessing the robots.txt file in WordPress can be done in several ways, depending on your level of technical expertise and the tools you have available. Below are different methods for accessing and editing this file.

1. Accessing Robots.txt via the WordPress Dashboard

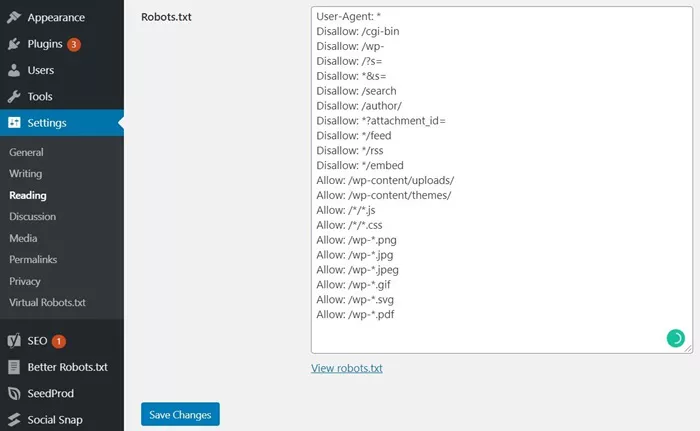

For beginners or those who prefer not to deal with code directly, the easiest way to edit the robots.txt file is with an SEO plugin. Several SEO plugins let you modify the robots.txt file from the WordPress dashboard without accessing the server through FTP or cPanel. Here’s how to do it using the popular Yoast SEO plugin:

Install and Activate Yoast SEO Plugin:

If you haven’t already, install the Yoast SEO plugin. Go to your WordPress dashboard and navigate to Plugins > Add New. Search for “Yoast SEO” and click “Install Now.” Once installed, activate the plugin.

Navigate to the Tools Section:

Once the plugin is activated, go to Yoast SEO > Tools in the left sidebar of your WordPress dashboard.

Click on ‘File Editor’:

On the Tools page, click File editor to open Yoast SEO’s file management tool.

Edit Robots.txt:

If the robots.txt file does not already exist, you will see an option to create it. If it exists, you can directly edit the file’s content. Add or modify the directives you want to use to manage search engine crawlers.

Save Changes:

Once you’ve made the necessary adjustments, simply click the “Save Changes” button to update the file.

2. Accessing Robots.txt via FTP

Another method to access the robots.txt file is through FTP (File Transfer Protocol). This method is ideal if you prefer manual access and modification of files.

Connect to Your Website via FTP:

Use an FTP client such as FileZilla or Cyberduck. You will need your FTP credentials, which you can usually find in your web hosting account.

Navigate to the Root Directory:

After logging into your server via FTP, locate the root directory of your WordPress installation. This is typically the folder named public_html or www.

Locate or Create the Robots.txt File:

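Inside the root directory, look for the robots.txt file. If it does not exist, create a new plain text file named robots.txt (all lowercase). When no physical file is present, WordPress serves a virtual robots.txt instead; once you upload a real file, the server delivers that file and the virtual one is no longer used.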

Edit the Robots.txt File:

Open the robots.txt file in a text editor and make your changes. Use the “User-agent” and “Disallow” directives to control which parts of your site search engine bots can crawl.

Save and Upload the File:

After editing, save the file. If you edited or created it on your computer, upload it to the root directory of your server, replacing any existing copy.
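If you update robots.txt often, the upload step can be scripted. The sketch below uses Python’s standard `ftplib.FTP_TLS` and assumes your host supports FTPS (FTP over TLS). Plain SFTP is a different protocol that `ftplib` does not speak. The host name, credentials, the `public_html` directory, the rule set, and the helper names `upload_robots` and `deploy` are all placeholders, not values from your hosting account.

```python
import io
from ftplib import FTP_TLS

# Hypothetical rule set; adjust it to your own site before uploading.
ROBOTS_TXT = """User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php
"""


def upload_robots(ftp, content: str, remote_dir: str = "public_html") -> None:
    """Write `content` to robots.txt inside `remote_dir` on an open FTP session."""
    ftp.cwd(remote_dir)  # the WordPress root directory on many hosts (assumption)
    # STOR creates robots.txt, or overwrites it if it already exists.
    ftp.storbinary("STOR robots.txt", io.BytesIO(content.encode("utf-8")))


def deploy(host: str, user: str, password: str) -> None:
    """Connect over FTPS and upload ROBOTS_TXT to the root directory."""
    with FTP_TLS(host) as ftp:
        ftp.login(user, password)  # also secures the control connection
        ftp.prot_p()               # encrypt the data connection as well
        upload_robots(ftp, ROBOTS_TXT)


# Usage, with placeholder values from your hosting account:
# deploy("ftp.example.com", "your-username", "your-password")
```

Keeping the upload in `upload_robots`, separate from the connection logic, makes it easy to point the same code at a different directory if your WordPress root is not `public_html`.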

3. Accessing Robots.txt via cPanel File Manager

If you’re using a web hosting provider that offers cPanel, accessing the robots.txt file becomes relatively simple. The cPanel File Manager allows you to view, edit, and create files directly on your server without needing additional software.

Log in to cPanel:

First, log in to your cPanel account, which is usually available at yourdomain.com/cpanel or through your hosting provider’s dashboard.

Open File Manager:

Once logged in, find and click on the File Manager under the “Files” section.

Navigate to the Root Directory:

In the File Manager, go to the root directory of your WordPress installation (typically public_html or www).

Find or Create the Robots.txt File:

Look for the robots.txt file in the root directory. If it’s not there, you can create a new file by clicking the + File button.

Edit the Robots.txt File:

Right-click on the file and select Edit to make changes. You can use the built-in editor to modify the content of the file.

Save Changes:

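After editing, click Save Changes. The updated file is live on your server immediately, although search engines may keep using a cached copy for a while; Google, for example, generally caches robots.txt for up to 24 hours.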

What Can You Include in Your Robots.txt File?

The robots.txt file uses a specific syntax to control how search engines should interact with your site. Below are the common rules and directives that can be used in the file.

User-agent

This directive specifies the search engine or crawler that the rule applies to. For example:

- User-agent: Googlebot – This rule applies only to Google’s crawler.

- User-agent: * – This rule applies to all search engine bots.
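Rules listed under a User-agent line form a group. A crawler follows the group that names it and falls back to the `*` group only when no named group matches; the groups are not combined. This minimal sketch uses Python’s standard `urllib.robotparser` to illustrate that with a hypothetical two-group file (the paths are made up for the example).

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: one group for Googlebot, one catch-all group for everyone else.
ROBOTS_TXT = """User-agent: Googlebot
Disallow: /drafts/

User-agent: *
Disallow: /wp-admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Googlebot matches its own group, so only /drafts/ is off limits to it.
print(parser.can_fetch("Googlebot", "/drafts/post-1/"))  # False
print(parser.can_fetch("Googlebot", "/wp-admin/"))       # True
# Any other crawler falls back to the "*" group.
print(parser.can_fetch("Bingbot", "/wp-admin/"))         # False
print(parser.can_fetch("Bingbot", "/drafts/post-1/"))    # True
```

Note the second line of output: because Googlebot has its own group, the `/wp-admin/` rule in the `*` group does not apply to it. To block a path for every crawler, repeat the rule in each named group.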

Disallow

The Disallow directive tells search engines which pages or directories they should not crawl. For example:

- Disallow: /private/ – This will prevent bots from crawling the “/private/” directory.

Allow

If you want to allow access to specific pages within a disallowed directory, you can use the Allow directive. For example:

- Disallow: /private/

- Allow: /private/public-page/ – This will disallow the entire “/private/” directory, but allow bots to crawl the “public-page” inside it.
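Crawlers that follow the Robots Exclusion Protocol standard (RFC 9309), including Googlebot, resolve overlapping Allow and Disallow rules by the longest matching path, with Allow winning a tie. Python’s built-in `urllib.robotparser` instead applies the first matching rule in file order, so it would report the public page as blocked for this example. The sketch below therefore evaluates the rules by hand. It is a simplified illustration: the `Rule` class and `is_allowed` function are hypothetical names, and wildcards (`*` and `$`) are not supported.

```python
from dataclasses import dataclass


@dataclass
class Rule:
    allow: bool  # True for an Allow line, False for a Disallow line
    path: str    # path prefix, e.g. "/private/"


def is_allowed(rules: list[Rule], path: str) -> bool:
    """Simplified longest-match evaluation for one User-agent group.

    The matching rule with the longest path wins; if an Allow and a Disallow
    rule of equal length both match, Allow wins.
    """
    best = None
    for rule in rules:
        # An empty path (e.g. "Disallow:") matches nothing.
        if not rule.path or not path.startswith(rule.path):
            continue
        longer = best is None or len(rule.path) > len(best.path)
        tie_goes_to_allow = (
            best is not None and len(rule.path) == len(best.path) and rule.allow
        )
        if longer or tie_goes_to_allow:
            best = rule
    # No matching rule means the URL may be crawled.
    return True if best is None else best.allow


# The Disallow/Allow pair from the example above.
rules = [
    Rule(allow=False, path="/private/"),
    Rule(allow=True, path="/private/public-page/"),
]

print(is_allowed(rules, "/private/notes.html"))    # False
print(is_allowed(rules, "/private/public-page/"))  # True
print(is_allowed(rules, "/blog/hello-world/"))     # True
```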

Sitemap

You can also use the Sitemap directive to point search engines to your XML sitemap, which helps them find all the pages you want indexed. For example:

Sitemap: https://www.example.com/sitemap.xml

The sitemap URL must be a full, absolute URL. The Sitemap line is independent of the User-agent groups, so it applies to all crawlers wherever it appears in the file.
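To check that a Sitemap line is written so crawlers can read it, you can parse the file with Python’s standard `urllib.robotparser`, whose `site_maps()` method (Python 3.8 and later) returns the sitemap URLs it found. The rules and the example.com URL below are placeholders.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt with one rule group and a sitemap reference.
ROBOTS_TXT = """User-agent: *
Disallow: /wp-admin/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() returns a list of URLs, or None if the file has no Sitemap lines.
print(parser.site_maps())  # ['https://www.example.com/sitemap.xml']
```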

Best Practices for Using Robots.txt in WordPress

Using the robots.txt file effectively requires understanding the potential impact on your website’s SEO. Here are some best practices to keep in mind:

- Be Cautious with Disallow Directives: Avoid blocking important pages that should be crawled by search engines, such as your homepage or essential category pages. Blocking the wrong pages could hurt your rankings.

- Don’t Block Search Engines Entirely: Some website owners mistakenly block search engines from crawling their entire site by using “Disallow: /” under “User-agent: *”. This can keep your site out of search results.

- Use Robots.txt in Combination with Meta Tags: For page-level control over indexing, use noindex or nofollow robots meta tags. A crawler can only see a meta tag on a page it is allowed to crawl, so don’t block a page in robots.txt if you rely on its noindex tag.

- Keep Robots.txt Files Simple: While it’s tempting to add many rules, a short, clear robots.txt file is easier to manage and less prone to errors.

- Test Changes: Always test your robots.txt changes, for example with the robots.txt report in Google Search Console, to make sure the directives work as expected and do not block important pages.
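Before uploading a new version, you can also run a quick local check. The Python sketch below uses the standard `urllib.robotparser` to report which must-crawl URLs a draft would block. The URLs, the drafts, and the `blocked_pages` helper are hypothetical. One caveat: this parser applies the first matching rule in file order rather than Google’s longest-match rule, so for files with overlapping Allow and Disallow lines, confirm the result in Google Search Console.

```python
from urllib.robotparser import RobotFileParser


def blocked_pages(robots_text: str, user_agent: str, must_crawl: list[str]) -> list[str]:
    """Return the URLs from must_crawl that robots_text would block for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return [url for url in must_crawl if not parser.can_fetch(user_agent, url)]


# Hypothetical pages that must always stay crawlable.
IMPORTANT = [
    "https://www.example.com/",
    "https://www.example.com/category/news/",
]

# A draft with a classic mistake: "Disallow: /" blocks the whole site.
DRAFT = """User-agent: *
Disallow: /
"""

# A corrected draft that only keeps crawlers out of the admin area.
FIXED = """User-agent: *
Disallow: /wp-admin/
"""

print(blocked_pages(DRAFT, "Googlebot", IMPORTANT))  # both URLs are reported
print(blocked_pages(FIXED, "Googlebot", IMPORTANT))  # []
```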

Conclusion

Accessing and managing the robots.txt file in WordPress is an important part of maintaining good SEO practices. Whether you use a plugin like Yoast SEO, access the file via FTP, or manage it through cPanel, understanding what the file does and how to control it can have a significant impact on your site’s visibility in search results. Used carefully, robots.txt keeps well-behaved crawlers focused on the pages that matter and away from the ones that don’t. Keep in mind that it is advisory: malicious bots can ignore it, so it is not a substitute for real access controls.
